What is marching cubes?

Marching cubes is a surface extraction algorithm. This means that it generates a surface given a density function. Once we have a surface, we can render any density function as an image using a graphics library such as OpenGL. This was originally developed for medical uses, where a 3d scan could be turned into an image allowing doctors to see the scan more intuitively.How does it work?

Marching cubes generates a list of triangles present in a voxel ( a 3d pixel ). Each voxel is the smallest cube 'unit' of 3d space. Voxels are computed independently given their density values, so we just compute as many voxels as we need to display to the user. This can and should be parallelized for performance.

There are 3 main steps in the algorithm.

Step 1: Case Generation

We say that the volume is 'stuff' when the value is > 0, and air when the value is < 0. So we can represent each corner of a voxel with a bit. There are 8 corners, which allow for 2^8 = 256 different cubes. We generate a lookup table that goes from the case (the 8 bytes describing the configuration of the cube) to the number of edges that have mesh points on them. If this number is 0, we know that we are completely outside or inside the mesh, and thus quit early.Step 2: Interpolation

The next step is to loop through each incident edge that a triangle is on, and find the exact position of the vertex through interpolation of the density values. Simply interpolate the 3d position (and normal) using the densities. We need to find the spot where the density is 0; The value that is between air and stuff.Step 3: Vertex Stream

Once we have the positions of the vertices, we use another lookup table that goes from the case number to a list of edges. We then loop through this list and emit vertices using the interpolated values from before. This is required to get the vertex points in the right order for drawing.What does it look like?

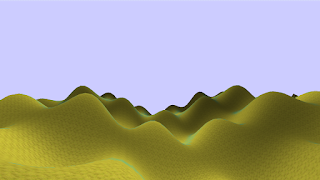

After implementing the steps above, we can render the surfaces of arbitrary functions. Some deterministic ones are below.Above we see a sin wave, a mix of sin and cos, and a gaussian with noise.

The more interesting functions use different octaves of random noise to produce complex yet coherent terrain, shown in the video below.

Implementation details

Geometry Shader

I first implemented this algorithm with a geometry shader. A geometry shader is a GPU program that takes in verticies and outputs different primitives. Therefore, my GS was given the voxel position verticies and then outputted multiple triangles. This was too slow as it was being regenerated each frame. To solve this, I needed to be able to store the geometry away and then re-render it as if it was a normal mesh.Compute Shader

The compute shader is a standalone shader. It works on arbitrary data, and is not part of the graphics pipeline. It took a very long time, but after learning about all the nuances of OpenGL 4.5, I was able to transform the geometry shader into a compute shader, allowing me to write to a SSBO ( Shader Storage Object Buffer), which I could then render as if it was a normal mesh. This improved performance a lot. It also meant that I could render arbitrary meshes that are potentially not on the screen. This is useful for 'unlimited' terrain rendering, which might cache precomputed chunks for later.Extras

Tri-Planar Texturing

One issue that came up was textures being stretched in the wrong direction. This is because I was sampling the texture only on the X and Z axes, which meant that a steep slope in the Y direction would stretch badly.To solve this, I used a technique called tri-planar texturing, which blends together 3 projections of the texture onto the X,Y,Z axes based off a weighted average of the normal. This prevents any stretching, because it chooses the right plane to project on to for the least amount of stretching.

Memory Management

In order to allow the player to explore the randomized terrain, I needed to implement a memory system that would allow the program to load in new chunks of the terrain, and automatically unload old chunks. I implemented a system that did this, by picking up chunks that are far away from the user and then moving them to where the user is looking, generating the correct mesh using the compute shader discussed above.LOD (Level of Detail)

LOD is the idea that a high vertex mesh far away isn't useful, as it takes up only a few pixels of screen space, but takes up a lot of computation. I added in a feature to the renderer that generated chunks with different amounts of detail depending on if it is far or close to the user, by manually changing the amount of voxels in a chunk. Each voxel then took up more world space as a result, reducing the vertex density.Final results

I uploaded a video to youtube, which shows all these features.The Future

Experiencing a user study

With the project coming to a close, it was time to think of improvements for the project and a perceptual studies that could be implemented in the future. I participated in the eye tracking perceptual study run by the Tobii masters students. They had me perform two tests. The first test was straight forward. I simply needed to focus on a highlighted object as it moved across the screen. There were different shapes and configurations presented. It was more of a statistical or reaction speed type of study.The second test dealt more with perception. It used eye tracking to implement LOD. The program would draw high polygon meshes around where the I was looking, and low polygon meshes in my peripheral vision. My goal as a participant was to tell if I could see a 'popping' effect, where the meshes go from low to high detail. The program sometimes used blurring techniques to hide this effect.

After participating in these studies, I came up with my own perceptual study that was related to the second test I did. It deals with terrain LOD using eye tracking.

Study

Eye tracking has been used in conjunction with LOD rendering to enhance the perceived quality of a scene while keeping the computational costs low. Since terrain involves a massive amount of geometry, it needs LOD schemes in order to run in real time and still feel expansive and open. Therefore, it is of interest to determine what details users can and can't tell differentiate when traversing through a terrain system.Can users tell the difference between multiple LOD eye tracking schemes with respect to quality?

If so, how much is this difference? And in what ways do they perceive the difference ( in quality, responsiveness, ect.)?

The perceptual study aims to address these questions.

Design

The experiment can be run with multiple types of LOD schemes. There are 3 major schemes that would be of interest to compare, each with their own varying constants, which will have to be determined with expertise.- Distance based rendering: The chunk LODs are determined solely by the distance from the person to the chunk. This is a classical LOD approach.

- Eye based rendering: The chunk LODs are determined solely by the optical angle at which the persons gaze is looking. Direct blocks will be rendered with high detail, blocks in the periphery will be rendered at lower quality.

- Hybrid rendering: A mix of the two techniques above.

The constants will be optimized for a specific machine. Once the distinct set of LOD schemes are determined, a world will be generated before test time, so that each person will see the same environment. For a fixed amount of time, they will not be able to control the camera. They will watch as the character in game goes along a set path. The live rendering will use the participants eye focus to determine the LOD rendering. After, the user will be allowed to freely roam the scene for a fixed time.

This process will cycle for multiple LOD schemes, and the user will be asked to differentiate between different scenes, which may or may not be the same.